Executive Summary

Speculative microarchitectural side-channels have been prevalent over the last several years, the most prominent being variants of Meltdown and Spectre. Another unique and harmful side effect of speculative execution is its influence on power side-channels. This technical report details how speculative execution can cause leakage through power side-channels. Specifically, it discusses:

- How speculative execution creates the potential for power side-channel leakage

- Tangible observations of power side-channel leakage using data collected from an Arm Cortex-M4 on the ChipWhisperer side-channel attack platform

- A unique weaponization of the leakage using machine learning techniques

Introduction

In this report we describe an approach for extracting data from speculated instructions inside an Arm Cortex-M4 on an STM32F303 SoC via power side-channel analysis (PSCA). In our test scenario, a branch whose not-taken path is privileged is executed in the unprivileged (branch-taken) condition, in a manner similar to other speculative-execution-based attacks such as Spectre. This pulls privileged information into the processor. When the speculated instruction is squashed at branch resolution, the processor pipeline leaks privileged information recoverable by PSCA. To exploit this leakage, we train a multi-class nonlinear support vector machine (SVM) whose validation-set accuracy is highly correlated with the Hamming distance between processor states. While typical PSCA attacks require thousands of measurements, our results show that the squashed values can be distinguished, up to a confusion factor, from a single sequence of measurements over time (a single power trace). We show that leakage exists whether these data movements result in permanent or transient state changes, and that this leakage is hidden from software. This implies that running PSCA-resistant software on devices with different microarchitectures could invalidate countermeasures without the programmer's knowledge.

Hypothesis

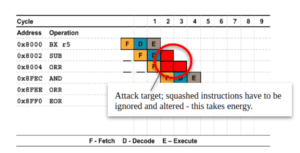

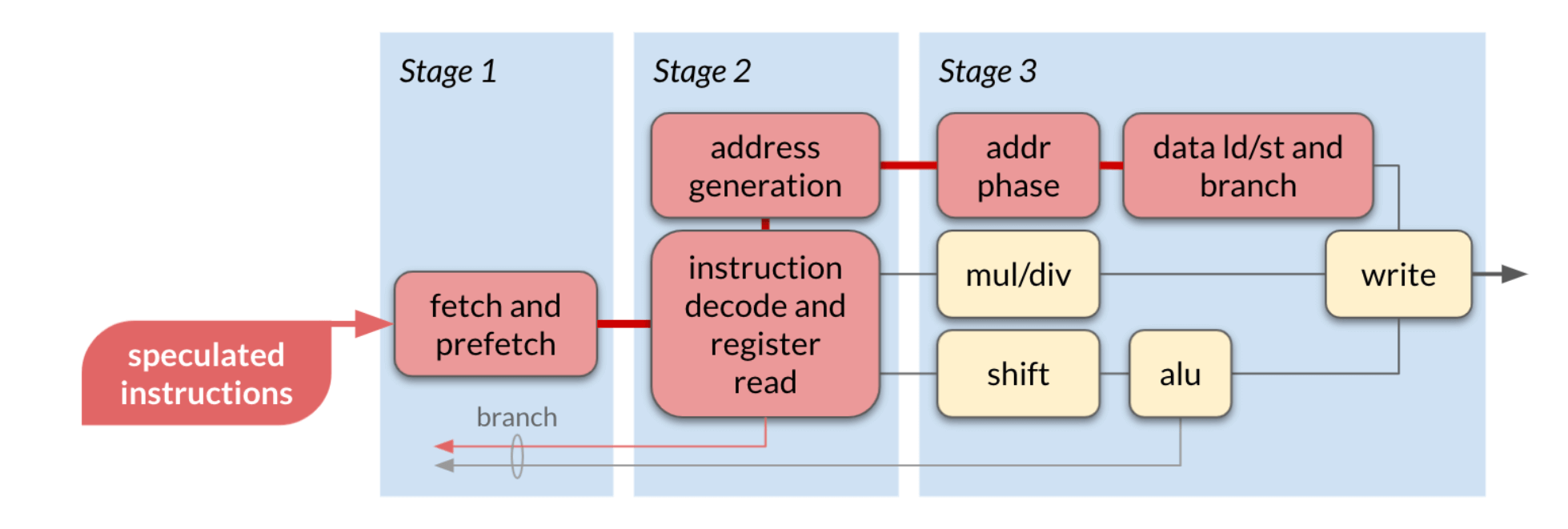

Our motivating insight is that when bubbles are inserted into a processor pipeline, these bubbles are not homogeneous with respect to power consumption. Instead, they are composed of information that was in the pipeline prior to invalidation. In the absence of countermeasures, any change in data requires power that is potentially visible as a voltage drop across the power supply rails. Figure 1 shows a modified representation of a 3-stage pipeline with a bubble induced by a taken indirect branch. We have annotated this diagram to highlight the cells whose operations must be ignored, i.e., the operations that compose the bubble. If our hypothesis is correct, we should be able to collect data with differing squashed operations and distinguish them in a profiled PSCA attack.

Figure 1: An annotated 3-stage pipeline diagram with a bubble inserted during a taken indirect branch.
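To make the hypothesis concrete, the sketch below uses a toy Hamming-distance power model, an assumption for illustration rather than a model fitted to the Cortex-M4: every bit that flips in a pipeline register costs one unit of power. If the bubble retains the squashed operand, the cost depends on the secret value; if the bubble were homogeneous, it would not. The function names are hypothetical.

```python
def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which a and b differ."""
    return bin(a ^ b).count("1")


def squashed_bubble_cost(prev: int, squashed: int, nxt: int) -> int:
    """Toy cost when the bubble retains a squashed operand.

    The register first switches from prev to the squashed value, then from the
    squashed value to the next valid value; every bit flip costs one unit.
    """
    return hamming_distance(prev, squashed) + hamming_distance(squashed, nxt)


def homogeneous_bubble_cost(prev: int, nxt: int) -> int:
    """Toy cost when the bubble simply holds prev until the next valid value."""
    return hamming_distance(prev, nxt)


# With the surrounding register state fixed at zero, the squashed-bubble cost
# depends on the secret byte, while the homogeneous bubble's cost does not.
costs = {ch: squashed_bubble_cost(0, ord(ch), 0) for ch in "aw"}
baseline = homogeneous_bubble_cost(0, 0)
print(costs, baseline)  # {'a': 6, 'w': 12} 0
```

Real pipelines switch many registers and buses at once, so this only illustrates why a data-dependent bubble is distinguishable in principle.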

Threat Model

We assume an attacker with the capability to introduce array offsets into software on the target SoC and to collect power traces over arbitrary execution intervals with a sample rate at least four times the clock rate of the target.

Design of the Experiment

The experimental platform is a ChipWhisperer-Lite (CW1173) board (Figure 2) with an integrated STM32F303 attack target. A Spartan-6 FPGA (left-center), programmed with OpenADC (an open-source A/D converter), serves as the capture device in conjunction with an Atmel ATSAM3U2C and samples at four times the SoC clock rate. The target is visible on the right of the board. The capture device and target share a clock, which improves the alignment of points of interest (POIs) across measurements from multiple runs of the same firmware on the target device. For the purposes of this experiment, caches are disabled on the target SoC.

Figure 2: ChipWhisperer-Lite (CW1173)

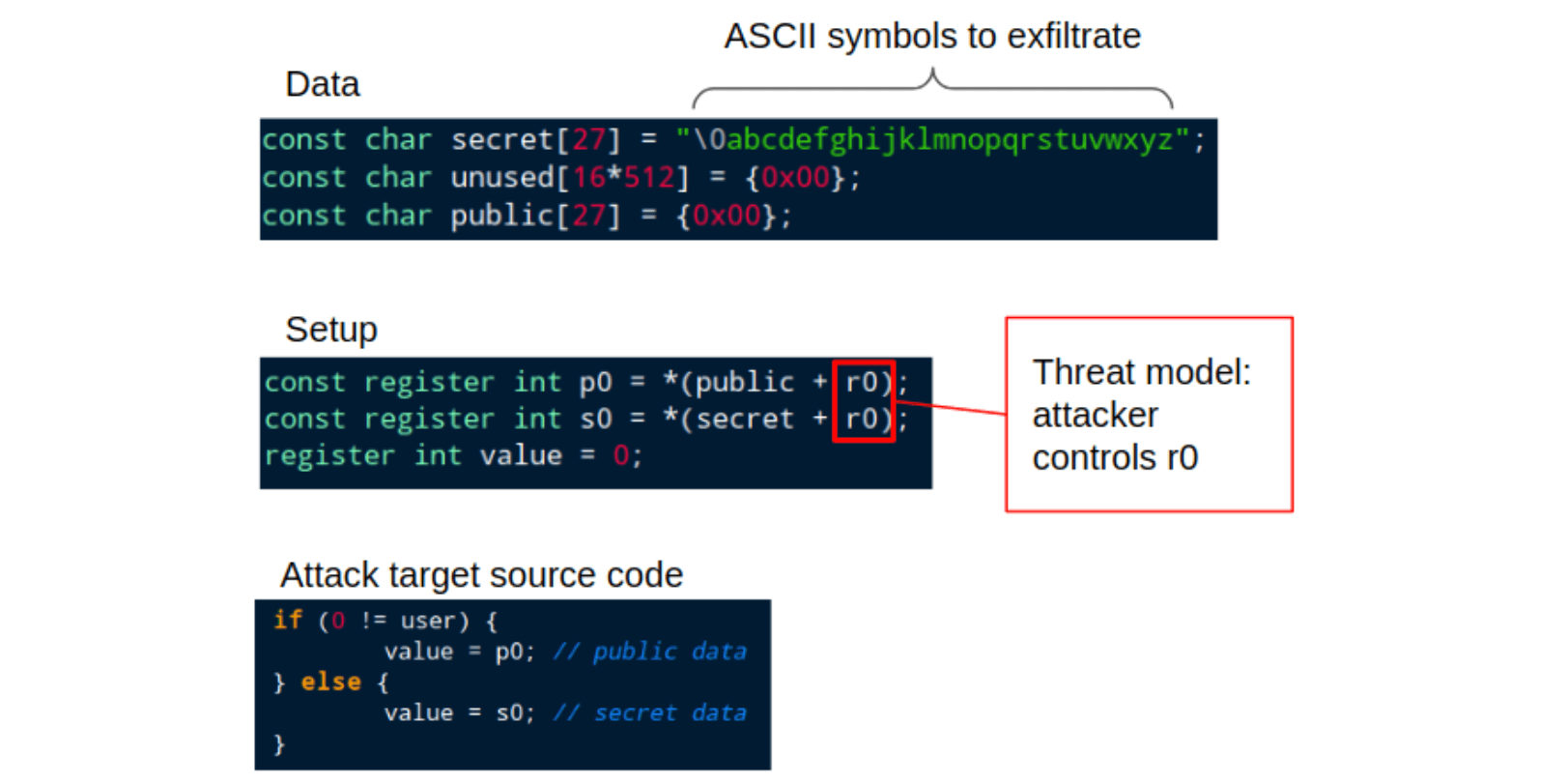

Figure 3 shows the target firmware. The Data section shows secret and public arrays, with an unused zeroized region between the secret and public (also zeroized) arrays to prevent locality effects. The Setup section shows an artificial scenario in which both public and secret information are loaded into the Arm Cortex-M4 registers and the attacker controls the offset r0. The Attack target source code shows a vulnerable section of code in which a register variable, value, is set to either a secret value, s0, or a public value, p0, depending on the value of user. The variable user represents the privilege level of the function's caller (not enforced by hardware), and we assume that the attacker does not control the value of user. Nops are inserted before and after the attack target code to aid visual inspection of the disassembly and power traces.

Figure 3: Relevant portions of C source of the target firmware. See body text for details.

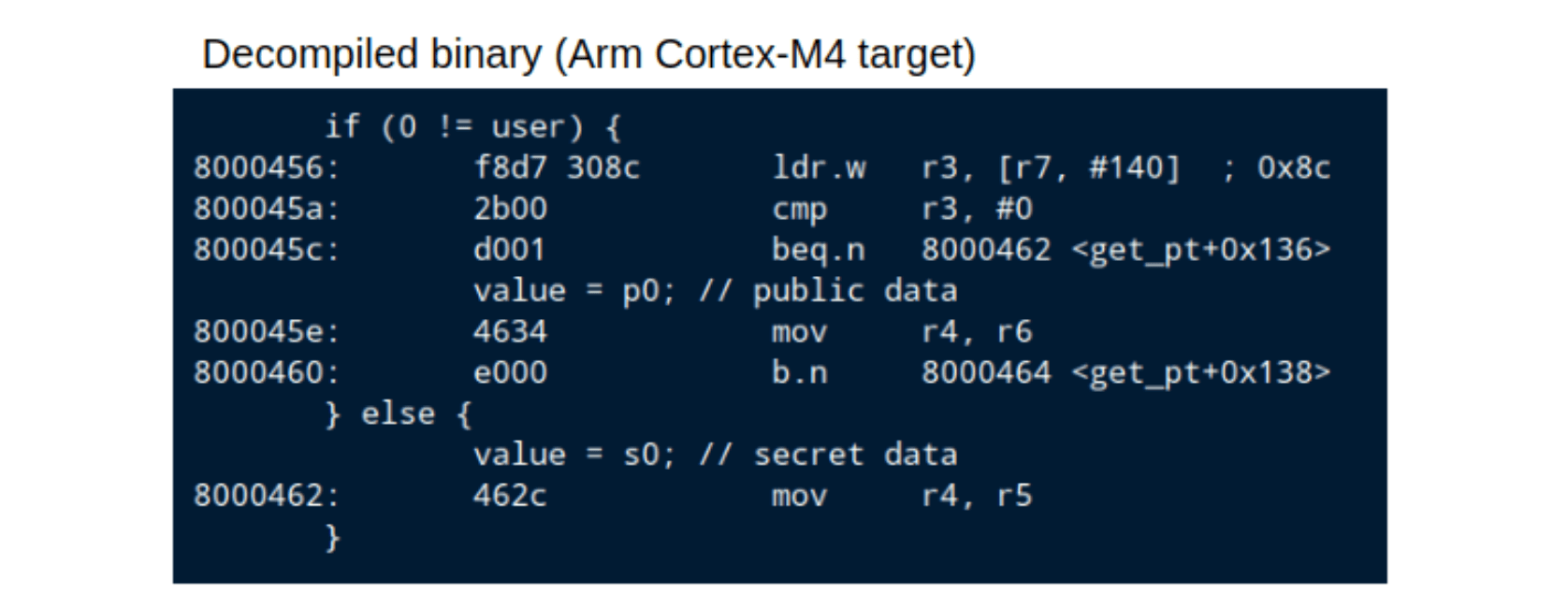

We validate by disassembly and inspection that the C source code in Figure 3 produces the intended binary. See Figure 4 below.

Figure 4: Disassembly with C debug annotations of the attack target source code from Figure 3.

Discovery

We collect 27K power traces, each 200 samples long, with 0 != user; in all of these traces 1 == user. In this condition, we expect the taken branch to produce a pipeline bubble containing data from the secret array. As a control, we also collect 27K traces with 0 == user, for which we do not expect a pipeline bubble. Each set of 27K traces comprises 27 classes of 1K traces, each class corresponding to one of the values in the secret array (Figure 3, top).

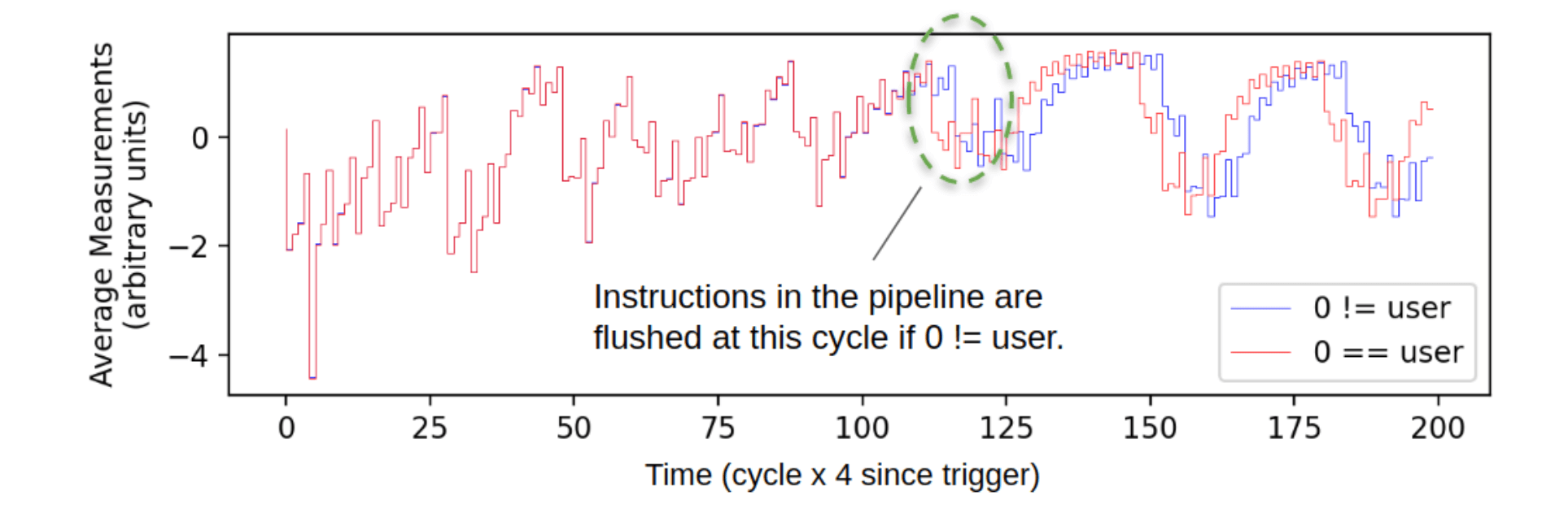

Figure 5 shows the average power traces (the arithmetic mean of 27K traces) collected from the ChipWhisperer board for each of the two major conditions. The expected delay due to the pipeline stall is visible at time index 113 as a phase shift between the red and blue lines, which are in phase at earlier times. This helps us identify the exact time at which the branch instruction occurs.

Figure 5: Average over 27K measurements from each of the branch taken (blue) and branch not taken (red/control) conditions. A pipeline stall is visible at time index 113 when the branch is taken (blue line). The y-axis units are arbitrary due to normalization.
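The averaging and stall localization can be sketched as follows, assuming each trace is a list of equal-length floats. The report identifies index 113 by inspection of the mean traces; the threshold-based first_divergence helper is a hypothetical automation of that step, and the toy traces stand in for real measurements.

```python
from statistics import fmean


def mean_trace(traces):
    """Sample-wise arithmetic mean of equal-length traces."""
    return [fmean(column) for column in zip(*traces)]


def first_divergence(mean_a, mean_b, threshold):
    """Index of the first sample where two mean traces differ by more than threshold."""
    for i, (a, b) in enumerate(zip(mean_a, mean_b)):
        if abs(a - b) > threshold:
            return i
    return None  # the traces never diverge by more than threshold


# Toy example: the "taken" trace repeats sample 4, i.e. it is delayed by one
# sample from index 5 onward, mimicking a stall-induced phase shift.
control = [float(i % 2) for i in range(10)]
taken = control[:5] + [control[4]] + control[5:9]
stall_index = first_divergence(mean_trace([control] * 3), mean_trace([taken] * 3), 0.5)
print(stall_index)  # 5
```

Because the capture device and target share a clock, traces are assumed to be aligned already, so no resynchronization step is included.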

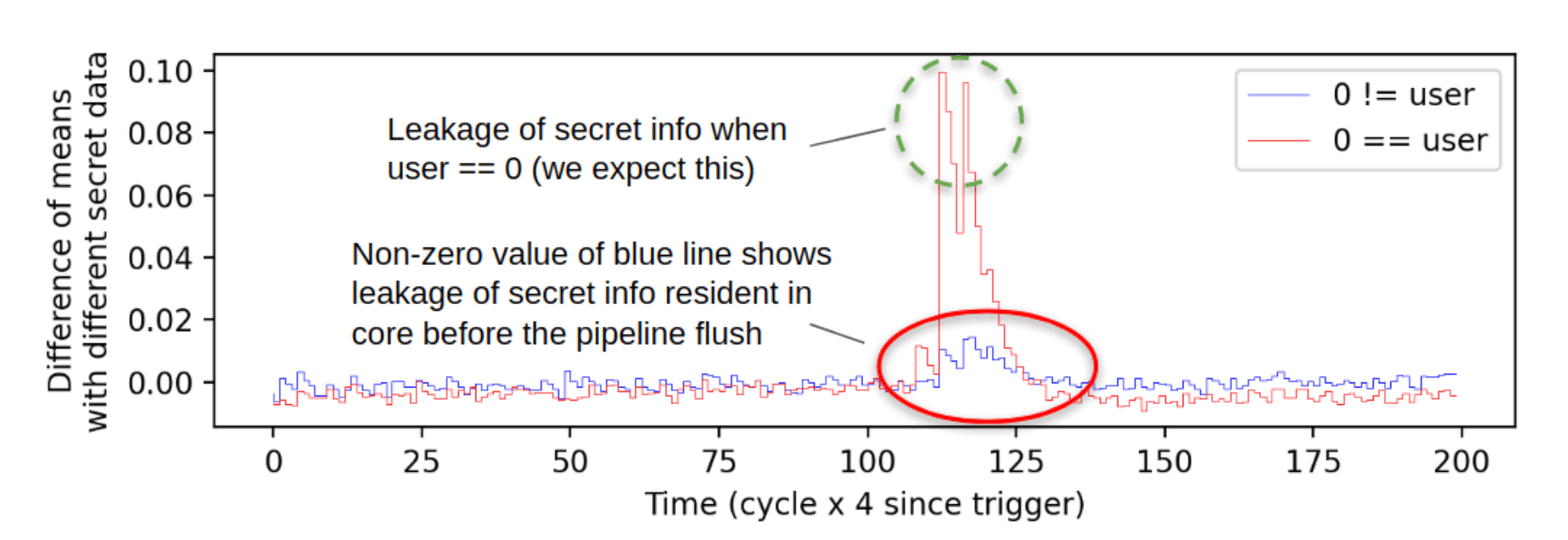

Figure 6 shows example differential traces (difference of means) between two of the 27 classes for each of the two major conditions. The largest peak, in the red line, is expected for the control case: it is well known that processors leak information recoverable by PSCA during normal execution. Consistent with prior work, this peak shows that the data processed during execution produce a measurable difference in power consumption, as reflected in the measured voltage.

The peak in the blue line during the same time period comes from the secret data resident in the pipeline when the bubble “pops” and the prior results are squashed. From the software’s perspective, this information was never committed, so this power side-channel leakage occurs independently of the running application.

Figure 6: Example peaks in a difference of means between two of the 27 classes. This is evidence of information leakage exploitable by PSCA.
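A minimal sketch of the difference-of-means computation behind such differential traces, assuming each class is a list of equal-length traces. The helper names are hypothetical, and the toy classes stand in for the 1K traces of 200 samples per class described above.

```python
from statistics import fmean


def difference_of_means(class_a, class_b):
    """Sample-wise difference between the mean traces of two classes."""
    mean_a = [fmean(col) for col in zip(*class_a)]
    mean_b = [fmean(col) for col in zip(*class_b)]
    return [a - b for a, b in zip(mean_a, mean_b)]


def largest_peak(diff):
    """Return (index, value) of the largest-magnitude sample."""
    idx = max(range(len(diff)), key=lambda i: abs(diff[i]))
    return idx, diff[idx]


# Toy classes of two 3-sample traces each.
class_a = [[0.0, 1.0, 0.0], [0.0, 3.0, 0.0]]
class_b = [[0.0, 0.0, 0.5], [0.0, 0.0, 0.5]]
diff = difference_of_means(class_a, class_b)  # [0.0, 2.0, -0.5]
print(largest_peak(diff))  # (1, 2.0)
```

Averaging within each class suppresses noise that is independent of the data, so the remaining peaks mark samples where the two classes consume power differently.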

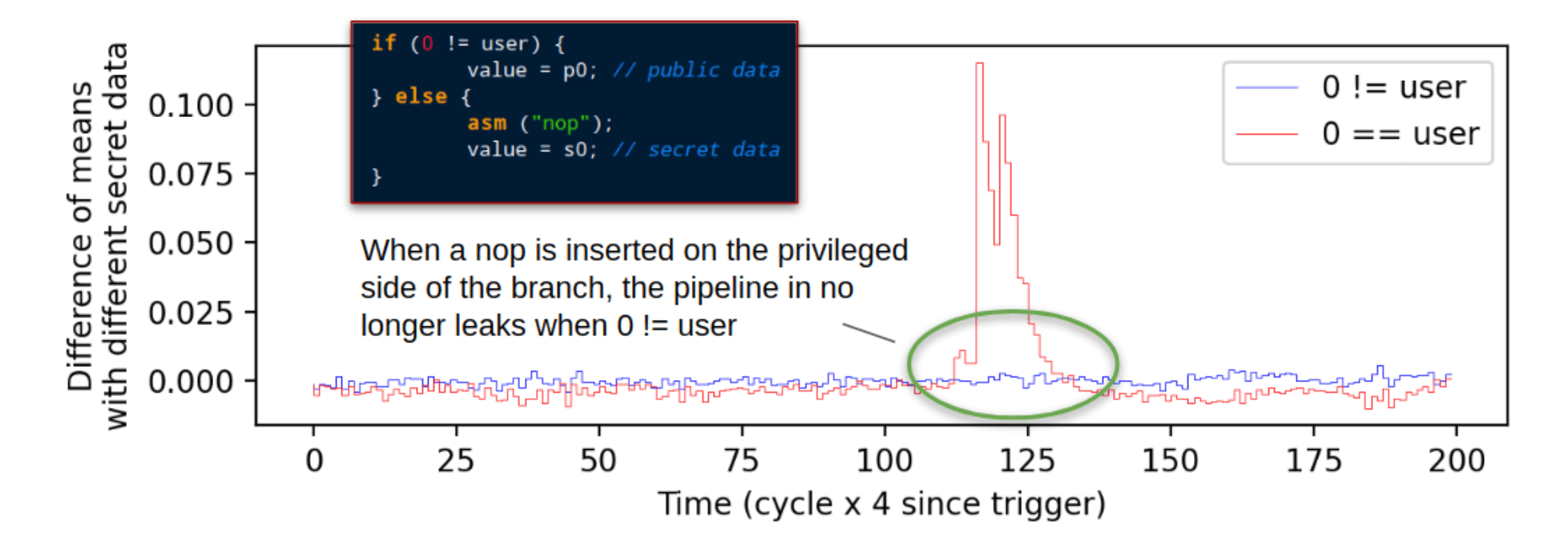

To further test our hypothesis, we insert a nop into the C source of the attack target and collect 27K traces under this new condition. The modified code and differential traces are shown in Figure 7. The peak in the control data persists, while the smaller peak in the blue line disappears. This suggests that adding the nop prevents data from the secret array from being pulled into the leakiest pipeline stage before the branch resolves.

Figure 7: Data similar to that in Figure 6, except for the addition of the line asm("nop"); after the else in the code shown in the call-out box.

At this point, weaponization seems an appropriate confirmatory statistical approach.

Weaponization

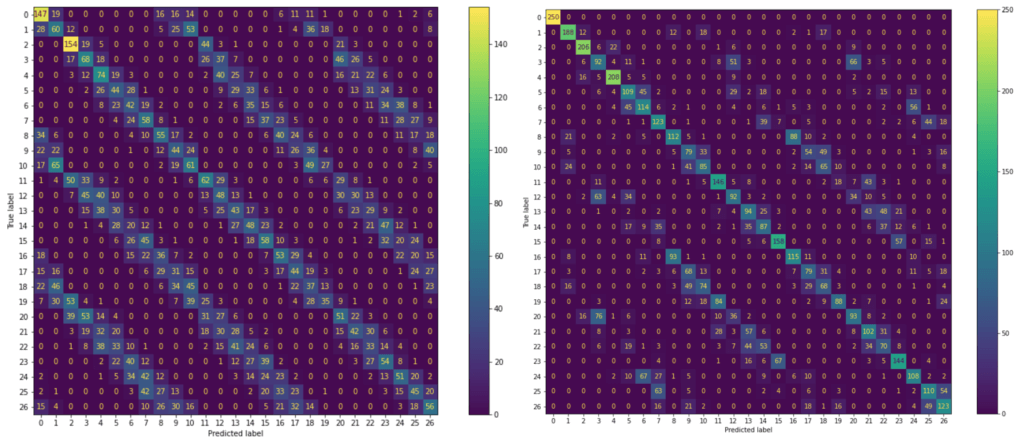

We train a 27-class nonlinear support vector machine (SVM) on the 26 lowercase ASCII characters and the null byte, stratifying each class of 1K traces and holding out 250 traces per class for testing.
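The stratified holdout can be sketched as follows, assuming traces are identified by index and labeled 0 to 26 (0 for the null byte, 1 to 26 for 'a' to 'z'; this label assignment is an assumption). Holding out 250 traces per class yields 27 × 250 = 6750 test traces. The SVM itself is not sketched, since the report does not state which implementation or kernel was used.

```python
import random
from collections import Counter, defaultdict


def stratified_holdout(labels, n_test_per_class, seed=0):
    """Return (train, test) index lists with n_test_per_class test items per class."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for label in sorted(by_class):
        idxs = list(by_class[label])
        rng.shuffle(idxs)
        test.extend(idxs[:n_test_per_class])
        train.extend(idxs[n_test_per_class:])
    return train, test


# 27 classes x 1000 traces each.
labels = [c for c in range(27) for _ in range(1000)]
train_idx, test_idx = stratified_holdout(labels, 250)
print(len(train_idx), len(test_idx))  # 20250 6750
print(set(Counter(labels[i] for i in test_idx).values()))  # {250}
```

Stratifying per class keeps the test set balanced, so every row of the resulting confusion matrix sums to the same count.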

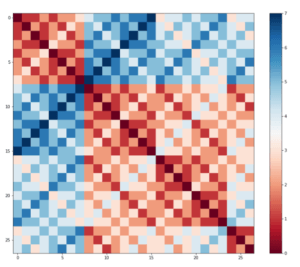

The confusion matrices in Figure 8 show classification results from the trained SVM on these 6750 test traces (control on the right). Cell (i, j) of each matrix holds the number of test traces with true class label i (y-axis, labels [0, 26]) that were classified as label j (x-axis). High counts on the main diagonal and low counts in off-diagonal cells indicate good classification.

Figure 8: Confusion matrices for 0 != user (left, pipeline squash) and 0 == user (right, control). See body text for details.
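For reference, the counting rule behind such a matrix is sketched below, assuming integer labels in [0, n_classes). The helper names are hypothetical; the true and predicted labels would come from the held-out traces and the trained classifier.

```python
def confusion_matrix(true_labels, predicted_labels, n_classes):
    """cm[i][j] counts traces of true class i (y-axis) predicted as class j (x-axis)."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(true_labels, predicted_labels):
        cm[t][p] += 1
    return cm


def per_class_accuracy(cm):
    """Fraction of each class's traces that fall on the main diagonal."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(cm)]


# Toy 3-class example: one trace of class 1 is confused with class 2.
cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 0, 1, 2, 2, 2], 3)
print(cm)  # [[2, 0, 0], [0, 1, 1], [0, 0, 2]]
print(per_class_accuracy(cm))  # [1.0, 0.5, 1.0]
```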

Classification in the control case is superior to that of the pipeline squash. Control traces belonging to class zero, corresponding to the null byte (0x00), are correctly classified in 100% of cases. This is consistent with the Hamming distance between values in the secret array being a major contributor to classification success: every lowercase ASCII letter (0x61 to 0x7A) differs from the null byte in at least three bits, whereas many pairs of letters differ in only one. Hamming distance between register states is a well-known contributor to PSCA-exploitable leakage. It is therefore reasonable to assume that values with a small Hamming distance from the previous pipeline state will be more easily misclassified, at least partially explaining the regular striations of misclassified values in the right-hand matrix.

However, to produce a matrix of Hamming distances whose striations align with the misclassifications in the control data (Figure 9; darker red implies smaller Hamming distance), we must apply a shift of -40 to the usual lowercase ASCII range of [97, 122], moving it into the range [57, 82]. Even after this transformation the striations are not perfectly aligned, suggesting that other activity consistently occurring within the SoC also weights the distance between traces.

Figure 9: Matrix of Hamming distances that best aligns with classification results for the control data.
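Both Hamming-distance observations above can be checked with a short sketch. It assumes the secret values are the byte codes of 'a' to 'z' plus 0x00, and that the -40 shift applies only to the 26 letters while class 0 (the null byte) stays at 0; the report does not say how the null byte was handled. The pair 'o'/'p' shows that the shift changes the matrix: their raw codes differ in 5 bits, their shifted codes in 4.

```python
import string


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which a and b differ."""
    return bin(a ^ b).count("1")


def hamming_matrix(values):
    """Pairwise Hamming distances; smaller entries correspond to darker red in Figure 9."""
    return [[hamming_distance(a, b) for b in values] for a in values]


LETTERS = [ord(c) for c in string.ascii_lowercase]  # 97..122 (0x61..0x7A)

# The null byte's distance to each letter is that letter's Hamming weight (at
# least 3), while some letter pairs, such as 'a' and 'c', differ in one bit.
min_from_null = min(hamming_distance(0x00, v) for v in LETTERS)
min_between_letters = min(
    hamming_distance(a, b) for a in LETTERS for b in LETTERS if a != b
)

# The -40 shift reported in the text; leaving class 0 at 0 is an assumption.
SHIFT = -40
raw_values = [0x00] + LETTERS
shifted_values = [0x00] + [v + SHIFT for v in LETTERS]  # letters now span 57..82
hd_raw = hamming_matrix(raw_values)
hd_shifted = hamming_matrix(shifted_values)
print(min_from_null, min_between_letters)  # 3 1
print(hd_raw[15][16], hd_shifted[15][16])  # 5 4  ('o' vs 'p')
```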

The pipeline squash data (Figure 8, left) display a less identifiable misclassification pattern. Note that these data are 200-dimensional, so there are many more possible origins for these patterns than those that are obvious at a glance. Despite this, the pattern resembles a less easily classified version of the control: the striations follow the same trends.

Broader Impacts of the Work

Our results show that the pipeline squash data are classifiable up to some unknown confusion factor. We hypothesize that the principal confusion factor is the Hamming distance between processor states during critical time instants where closer distances yield larger numbers of misclassified traces.
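One way to quantify this hypothesis is sketched below: correlate the off-diagonal confusion counts with the pairwise Hamming distances of the corresponding classes, expecting a negative coefficient (smaller distance, more confusion). The report does not describe how its correlation was measured; the use of the Pearson coefficient and the helper names are assumptions, and the toy matrices are constructed to be perfectly linear.

```python
from statistics import fmean


def pearson(xs, ys):
    """Pearson correlation coefficient; assumes neither sequence is constant."""
    mx, my = fmean(xs), fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


def confusion_vs_distance(cm, hd):
    """Correlate off-diagonal confusion counts with pairwise Hamming distances."""
    n = len(cm)
    counts = [cm[i][j] for i in range(n) for j in range(n) if i != j]
    dists = [hd[i][j] for i in range(n) for j in range(n) if i != j]
    return pearson(dists, counts)


# Toy data: misclassification counts fall linearly as Hamming distance grows.
hd = [[0, 1, 3], [1, 0, 2], [3, 2, 0]]
cm = [[90, 7, 1], [7, 85, 4], [1, 4, 93]]
r = confusion_vs_distance(cm, hd)
```

With real data the diagonal is excluded because correct classifications say nothing about which classes are confused with each other.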

Our test case is constructed artificially to provoke a targeted class of information leak; however, this report demonstrates that a small amount of data motion can leak information exploitable through PSCA. Whereas real-world attacks commonly require thousands of power traces to perform a single classification, our results show that the values moving through the processor can be distinguished even with a single power trace. Moreover, this holds whether these data movements result in permanent or transient state changes, and this leakage may be invisible to software.

This implies that PSCA is a hardware problem. A change of device microarchitecture on which a piece of PSCA-resistant software is running could invalidate countermeasures despite a consistent instruction set. Additionally, special modes of operation that might be engaged to act as PSCA countermeasures could be bypassed by clever manipulation of the pipeline along with other software-opaque design elements.

Benefits of Using Tortuga Logic’s Radix

In prior blog posts we discussed the benefits of using Radix for speculative-execution-based vulnerabilities such as Meltdown. Radix’s unique information flow technology detects the movement of secret assets throughout the microarchitecture. This movement of information contributes directly to power consumption and the resulting power side-channels. For the use case presented here, these flows are shown pictorially below:

Figure 10: Illustration of potential information flows (red) through a Cortex-M4 in the presented scenario. Tortuga Logic’s Radix-S or Radix-M security verification tools will reveal locations in a microarchitecture where any speculated state is retained. Retained state is responsible for numerous security vulnerabilities, including power side-channel attacks.

Radix provides a unique methodology and analysis views to detect information flows in the design that may contribute to power side channels. Please refer to our Solutions page for more details.