Common Weakness Enumeration (CWE) is a community-developed list of software and hardware weakness types which may cause security issues. CWEs for software have been around since 2006 but the list of hardware weaknesses is new. The list is maintained by the MITRE organization and can be found here: cwe.mitre.org

A key question in verification is “What should I verify?” In Functional Verification, a high-level answer would be “Everything the design should do according to the specification”. While this is a very complex and time-consuming task, it is guided by a specification, which makes the verification task achievable, at least in theory.

The same question applied to Security Verification is much harder to answer. The high-level answer, in this case, would be “Anything that the design should NOT do”. That is not a very useful guide to which test cases or properties to write.

This is where the CWE list can help. The list currently contains 59 hardware security weaknesses. While not an exhaustive list, it is a great starting point. Start by verifying that your design doesn’t have any of the weaknesses in the CWE list. As you get experience writing security verification tests based on the CWE list, you will likely uncover additional areas in your design to verify.

Each CWE entry in the database has a description and potential mitigations. For example, one entry is described as follows:

“The product behaves differently or sends different responses under different circumstances in a way that is observable to an unauthorized actor, which exposes security-relevant information about the state of the product, such as whether a particular operation was successful or not.”

This CWE example addresses side-channel attacks. Many others address debug logic flaws, improper access control, and HW/SW race conditions. The vast majority of the weaknesses in the CWE list can be described and verified as information flow problems. For example, information about an encryption key should not flow through a timing side channel to an adversary, and a CPU running unprivileged code should not be able to modify access control registers.

Verifying that certain information flows don’t exist in a design can be done with directed simulation test cases using multiple illegal values and scenarios. A much more effective method is to write information flow properties that describe which information flows are prohibited, and to include these properties in your functional simulations.
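To make the contrast concrete, here is a minimal Python sketch of the idea behind an information flow property. It is a toy taint-tracking model, not a description of how any particular tool works; the `Labeled` class, the `write_reg` helper, and the label names are all hypothetical. It shows one case where a value-based directed check misses a write that a flow-based check catches.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Labeled:
    """A value plus the set of sources that have influenced it."""
    value: int
    labels: frozenset = frozenset()


def write_reg(current: Labeled, data: Labeled, write_enable: bool) -> Labeled:
    """Register update: take the new data (and its labels) when enabled."""
    return data if write_enable else current


# The register starts at 0 with no untrusted influence.
reg = Labeled(0)

# An untrusted master writes 0, the value the register already holds.
untrusted_data = Labeled(0, frozenset({"untrusted"}))
reg_after = write_reg(reg, untrusted_data, write_enable=True)

# Directed, value-based check: the value did not change, so the check passes
# even though the illegal write went through.
value_check_passes = (reg_after.value == reg.value)

# Flow-based check: the register now carries the "untrusted" label.
flow_detected = "untrusted" in reg_after.labels
```

The directed test only notices the problem if it happens to pick a value that differs from the register's current contents, whereas the flow check is independent of the data values used.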

Example of Hardware Security Verification through CWE-1231

Let’s look at a more detailed example of how you can translate a CWE into a security rule you can include in your simulation regression runs. CWE-1231 is described as follows:

“In integrated circuits and hardware IPs, device configuration controls are commonly programmed after a device power reset by a trusted firmware or software module (e.g., BIOS/bootloader) and then locked from any further modification. This is commonly implemented using a trusted lock bit, which when set disables writes to a protected set of registers or address regions. Design or coding errors in the implementation of the lock bit protection feature may allow the lock bit to be modified or cleared by software after being set to unlock the system.”

Depending on the actual design implementation, there may be several vulnerabilities to verify. In this case, two security requirements need to be verified:

- A register protected by the lock bit cannot be modified when the lock bit is set

- The lock bit cannot be cleared by unprivileged software

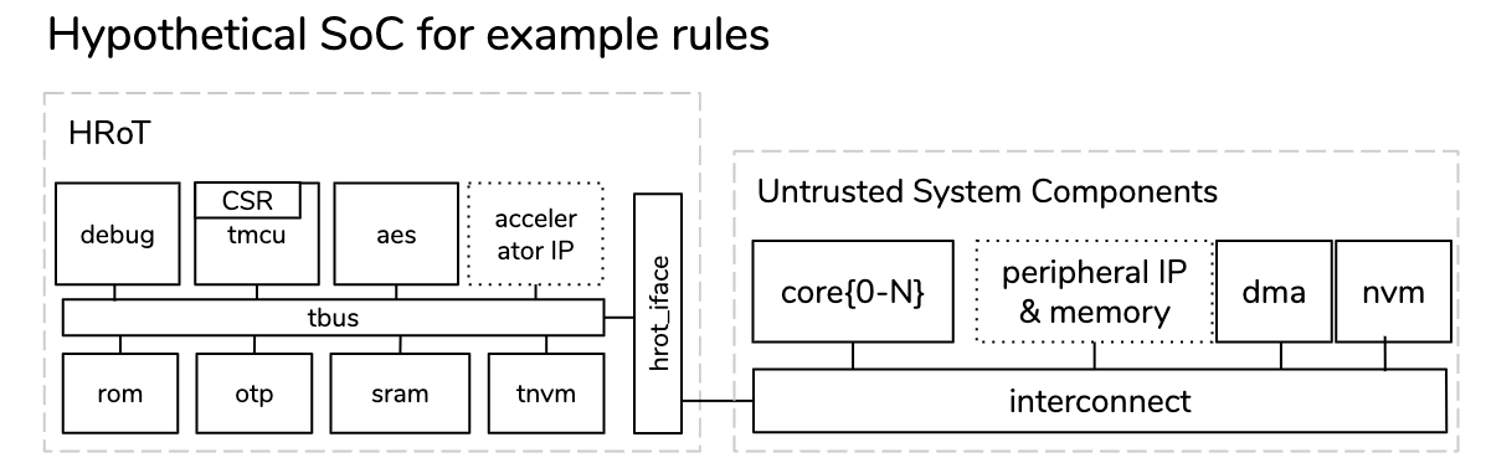

Consider the hypothetical SoC below:

It contains a thermal sensor with a programmable max temperature. Another register, tmcu.csr.temp_shutdown, determines the action to take when the max temperature is reached. If the register is programmed to “1”, the SoC is shut down to avoid malfunction or damage. The max temperature register and the temp_shutdown register are both meant to be protected by a lock bit: once trusted firmware sets the lock bit in tmcu.csr.reg_lock, it should no longer be possible to modify them. Due to a design bug, however, the temp_shutdown register is not protected by the lock bit.

The register lock bit must only be writable by the trusted tmcu processor, not by any of the untrusted cores.

Threat Model

Malicious software running on one of the untrusted cores can potentially mount a fault injection attack by clearing the tmcu.csr.temp_shutdown register, allowing the device to overheat to a point where behavior is unpredictable and security features may no longer be active.

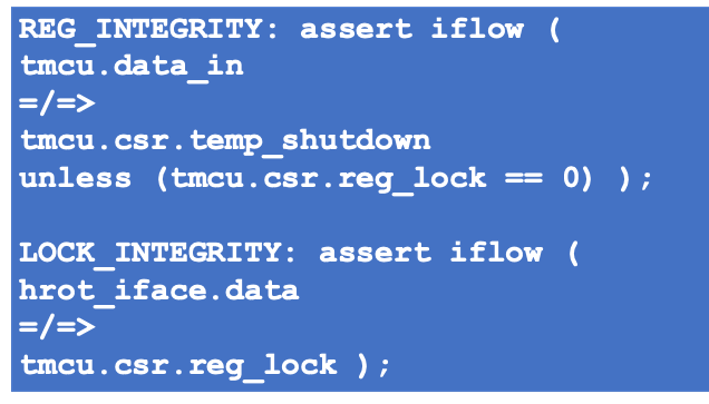
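The scenario can be reproduced with a small behavioral model. The sketch below is a simplified Python stand-in for the register block, not RTL from any real design: the `max_temp` register name, the `core0` master name, the reset values, and the `lock_protects_shutdown` switch are assumptions made for illustration. For simplicity it also lets the trusted processor rewrite the lock bit freely.

```python
class TmcuCsr:
    """Toy model of the tmcu.csr register block in the hypothetical SoC."""

    def __init__(self, lock_protects_shutdown: bool = False):
        # False reproduces the design bug; True models the intended behavior.
        self.lock_protects_shutdown = lock_protects_shutdown
        self.reg_lock = 0
        self.max_temp = 0       # hypothetical name for the max temperature register
        self.temp_shutdown = 0

    def write(self, master: str, reg: str, value: int) -> None:
        if reg == "reg_lock":
            # Only the trusted tmcu processor may write the lock bit.
            if master == "tmcu":
                self.reg_lock = value & 1
        elif reg == "max_temp":
            # Correctly gated by the lock bit.
            if not self.reg_lock:
                self.max_temp = value
        elif reg == "temp_shutdown":
            # In the buggy design the lock bit is ignored here.
            if not (self.lock_protects_shutdown and self.reg_lock):
                self.temp_shutdown = value & 1
        else:
            raise KeyError(f"unknown register: {reg}")


def run_attack(csr: TmcuCsr) -> TmcuCsr:
    # Trusted firmware programs the registers, then locks them.
    csr.write("tmcu", "max_temp", 95)
    csr.write("tmcu", "temp_shutdown", 1)
    csr.write("tmcu", "reg_lock", 1)
    # Malicious software on an untrusted core tries to undo the setup.
    csr.write("core0", "reg_lock", 0)
    csr.write("core0", "max_temp", 200)
    csr.write("core0", "temp_shutdown", 0)
    return csr


buggy = run_attack(TmcuCsr(lock_protects_shutdown=False))
fixed = run_attack(TmcuCsr(lock_protects_shutdown=True))
```

In the buggy model the lock bit and the max temperature survive the attack, but `temp_shutdown` ends up cleared, which is exactly the gap described in the threat model. With the switch set to `True`, all three registers keep the values programmed by the trusted firmware.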

Radix Security Rules

The two requirements can be expressed as information flow problems:

- Information (data) cannot flow to a protected register when the lock bit is set

- Data cannot flow to the lock bit from any of the untrusted cores

Using the Radix “=/=>” no-flow operator and the “unless” keyword we can write the following security rules:

Verification

Using the security rules, Cycuity’s Radix tool builds a security model that, when simulated together with the design, flags any violation of the rules.
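The general idea of a rule monitor running alongside a simulation can be sketched in Python. This is neither Radix syntax nor a description of how Radix works internally; it is a rough approximation of "no flow from source to destination unless condition" applied to a recorded trace of register updates. The `RegUpdate` and `NoFlowRule` types, the master names, and the `max_temp` register name are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class RegUpdate:
    """One register update that actually took effect during simulation."""
    master: str      # who issued the write
    register: str    # which register changed
    lock_set: bool   # value of reg_lock at the time of the update


@dataclass(frozen=True)
class NoFlowRule:
    """Source must not flow to destination, unless the condition holds."""
    name: str
    source: Callable[[RegUpdate], bool]
    dest: Callable[[RegUpdate], bool]
    unless: Callable[[RegUpdate], bool]

    def violated_by(self, ev: RegUpdate) -> bool:
        return self.source(ev) and self.dest(ev) and not self.unless(ev)


UNTRUSTED = {"core0", "core1"}
PROTECTED = {"max_temp", "temp_shutdown"}

rules = [
    # Data from any master must not reach a protected register unless unlocked.
    NoFlowRule("locked_regs",
               source=lambda ev: True,
               dest=lambda ev: ev.register in PROTECTED,
               unless=lambda ev: not ev.lock_set),
    # Data from untrusted cores must never reach the lock bit.
    NoFlowRule("lock_bit_untrusted",
               source=lambda ev: ev.master in UNTRUSTED,
               dest=lambda ev: ev.register == "reg_lock",
               unless=lambda ev: False),
]


def check(trace: List[RegUpdate], rules: List[NoFlowRule]) -> List[Tuple[str, RegUpdate]]:
    """Return every (rule name, event) pair where a rule is violated."""
    return [(r.name, ev) for ev in trace for r in rules if r.violated_by(ev)]


trace = [
    RegUpdate("tmcu", "max_temp", lock_set=False),
    RegUpdate("tmcu", "temp_shutdown", lock_set=False),
    RegUpdate("tmcu", "reg_lock", lock_set=False),
    RegUpdate("core0", "temp_shutdown", lock_set=True),  # the design bug
]

violations = check(trace, rules)
```

For this trace the monitor reports a single violation of the `locked_regs` rule, caused by the untrusted write to `temp_shutdown` after the lock bit was set. A real information flow tool tracks flows through the design logic itself rather than through a hand-written event trace, so this sketch only conveys the shape of the check.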