In the world of semiconductor System-on-Chip (SoC) design, it’s not uncommon to include undocumented features and control bits that enable or disable certain functionality. These elusive bits are commonly referred to as “chicken bits”. They allow designers to test new features that may not be ready to roll out to customers, or to disable functionality that degrades performance or does not work according to the specification.

While these bits offer flexibility and utility to the original designers, they were never intended for customer use and are therefore not documented. This can make functional verification of the device more complex and less complete. Chicken bits may also control security functionality, or features that interact with security functions, which poses challenges for security assurance.

Do the undocumented features and bits have negative security implications by introducing weaknesses that can be exploited?

CWE-1242, “Inclusion of Undocumented Features or Chicken Bits”, addresses this concern and acknowledges the security implications of these hidden functionalities. The Common Weakness Enumeration (CWE) entry provides an illustrative example of chicken bit usage, but its suggested mitigation is often not practical: “The implementation of chicken bits in a released product is highly discouraged. If implemented at all, ensure that they are disabled in production devices. All interfaces to a device should be documented.” The CWE example does offer a solution for situations where an authorization scheme can be used, but in general these bits and features will largely remain unknown and undocumented.

Undocumented or not, these bits need to be verified in terms of impact on security requirements. Can the confidentiality or integrity of security assets be compromised through the unknown chicken bits?

Because these bits are undocumented, their presence is unknown, and security and verification teams are left in the dark about their functionality. The simulation test suite is unlikely to activate and check an undocumented feature, and such features are not considered in the security requirements.

This lack of visibility raises critical questions: How can you verify the presence and impact of something you are not aware of?

Information Flow Tracking

Addressing this challenge requires innovative approaches. One solution is to use information flow tracking of secure assets in the design, as used by Cycuity’s Radix technology. Since we are concerned about confidentiality and integrity of assets, it makes sense to analyze where in the design information from the assets can flow at all times. By visualizing the information flow, we can identify potential security weaknesses related to chicken bits or other unintended bits.
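To make the idea concrete, the sketch below shows the kind of propagation rules used by gate-level information flow tracking (GLIFT), a generic published technique: each signal carries a taint bit alongside its value, and a gate output is tainted only if a tainted input can actually influence it. It is shown for illustration only; whether any particular tool, Radix included, works this way internally is an assumption. The function names `and_taint` and `mux_taint` are hypothetical.

```python
# Gate-level information flow tracking (GLIFT-style) shadow logic.
# Every signal has a value bit and a taint bit (1 = carries asset information).


def and_taint(a: int, a_t: int, b: int, b_t: int) -> int:
    """Taint of out = a & b.

    A tainted input affects the output only when the other input is 1
    or is itself tainted; an untainted 0 on the other input masks it.
    """
    return (a_t & b_t) | (a_t & b) | (b_t & a)


def mux_taint(sel: int, sel_t: int, a: int, a_t: int, b: int, b_t: int) -> int:
    """Taint of out = b if sel else a.

    The selected data input passes its taint through. A tainted select
    taints the output only if flipping it could change the output, i.e.
    the data inputs differ or either one is tainted.
    """
    data_t = b_t if sel else a_t
    return data_t | (sel_t & ((a ^ b) | a_t | b_t))
```

For instance, `mux_taint(sel=0, sel_t=0, a=0, a_t=0, b=1, b_t=1)` returns 0: a key on the unselected input of a mux does not taint the output while an untainted select stays low, which is why a control bit that is never toggled can hide a leak from simulation.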

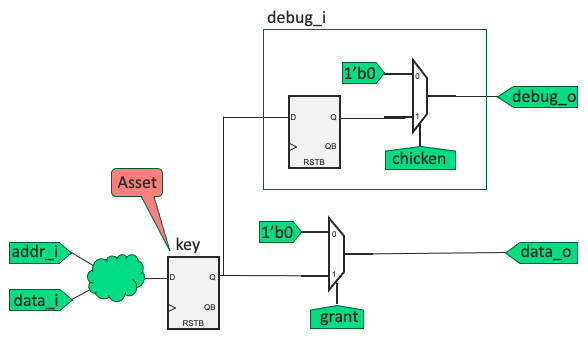

For example, consider the simplified design scenario below.

A cryptographic key is managed within the SoC. The secure asset, “key”, is written to a register at a specific address and is driven on the data_o output only when grant is asserted. The contents of the debug_i instance are undocumented: logic to enable a chicken bit is implemented, but it is unknown and not exercised by any of the simulation test cases. If an attacker were to gain insight into this functionality, however, enabling the chicken bit becomes a security weakness, because the key then leaks to the debug_o output, which may be accessible to the attacker, compromising the confidentiality of the key. An information flow property checking the confidentiality of the key with respect to all outputs when grant is not asserted will not report a violation, since the chicken bit defaults to zero.
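The scenario can be approximated with a small behavioral model. This sketch is hypothetical: it assumes an 8-bit key, models data_o and debug_o as 2:1 muxes selected by grant and by the chicken bit respectively, and uses coarse word-level taint (one taint flag per signal). The checker expresses the confidentiality property described above, so a test suite that never sets the chicken bit reports no violations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sig:
    """A signal value plus a taint flag (True = carries key information)."""
    value: int
    taint: bool


# Hypothetical model of the simplified scenario; signal names follow the text.
KEY = Sig(0xF0, True)    # the secure asset, tainted at its source
ZERO = Sig(0x00, False)  # constant driven when an output is not selected


def mux(sel: Sig, a: Sig, b: Sig) -> Sig:
    """out = b if sel is 1 else a, with coarse (word-level) taint propagation."""
    out = b if sel.value else a
    # A tainted select leaks only if flipping it could change the output.
    taint = out.taint or (sel.taint and (a.value != b.value or a.taint or b.taint))
    return Sig(out.value, taint)


def step(grant: int, chicken: int) -> dict:
    """Evaluate both outputs for one cycle."""
    g = Sig(grant, False)
    c = Sig(chicken, False)  # undocumented chicken bit; tests leave it at 0
    return {
        "data_o": mux(g, ZERO, KEY),   # documented path, gated by grant
        "debug_o": mux(c, ZERO, KEY),  # hidden path inside debug_i
    }


def confidentiality_violations(trace: list) -> list:
    """Property: while grant is deasserted, no output may carry key information.

    trace is a list of (grant, chicken) pairs, one per cycle.
    """
    hits = []
    for cycle, (grant, chicken) in enumerate(trace):
        for name, sig in step(grant, chicken).items():
            if not grant and sig.taint:
                hits.append((cycle, name))
    return hits


# Existing tests never touch the chicken bit, so the property holds.
print(confidentiality_violations([(0, 0), (1, 0), (0, 0)]))  # []
# Setting the chicken bit exposes the key on debug_o.
print(confidentiality_violations([(0, 1)]))  # [(0, 'debug_o')]
```

The property is only as good as the stimuli: it can flag the leak, but only in a trace that actually sets the chicken bit.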

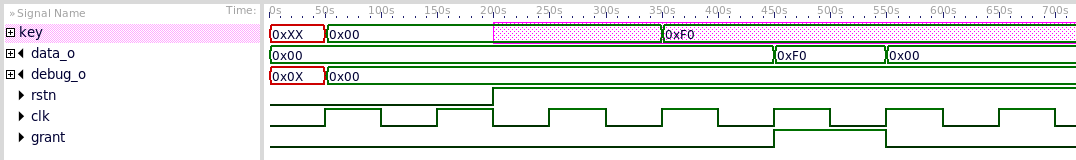

Red shading in the waveform above indicates that a signal carries information from the asset, in this case the key register. As expected, the key value 0xF0 is visible on the data_o output when grant is asserted, but information from the asset does not reach the debug_o output, which would have been a violation.

To see information flow to the debug_o output, we would have to toggle the chicken bit, but that assumes we know it exists, and we don’t. We need another approach. This also highlights a more general problem: how do you identify unknown security weaknesses if you don’t know what to look for?

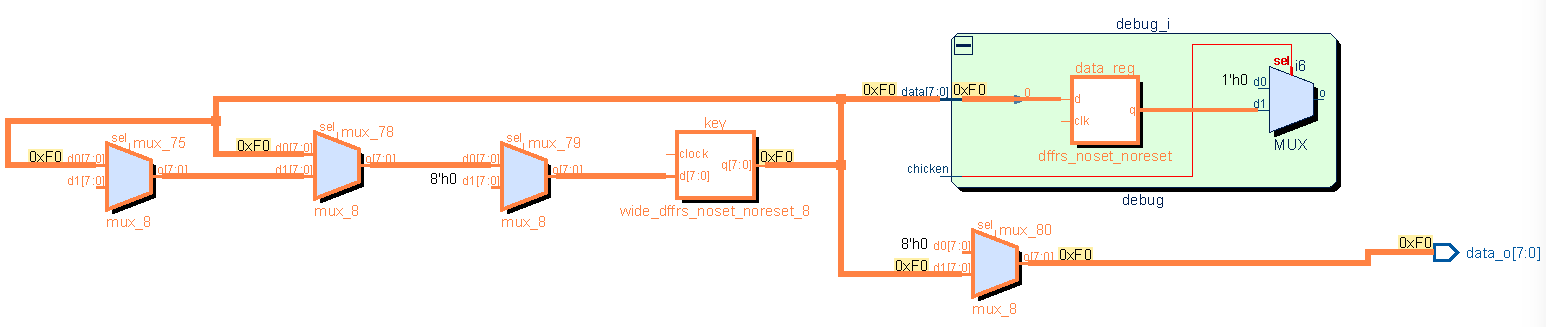

To get around this problem, let’s focus on the secure asset and ask: “Where in the design does information from the key flow, and where should it flow?” We can visualize, in schematic form, all signals in the design that carry information from the asset at any time.

In this schematic visualization, where orange indicates information from the asset, we discover that secret information flows to the debug_i instance, which is unexpected and undocumented and therefore a potential security weakness. There is a structural path to the debug_o output, which means the key may leak through the debug instance. Having identified debug_o as a potentially vulnerable output, we see that information flow stops at a mux controlled by the unknown “chicken” signal. This control path was previously unknown, but once identified, it can be verified to determine whether a security weakness exists. Knowing ahead of time where information from an asset is supposed to flow is difficult; it is much easier to review where it actually flows and determine whether that poses a security risk.
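The structural question (which signals can information from the key reach at all?) is independent of test stimuli and can be sketched as a forward graph search over a netlist. The netlist below is a hypothetical, hand-written stand-in for the example design (cell and net names such as `dbg_mux` and `debug_i.k` are assumptions), and the search is a plain breadth-first traversal, not any tool’s actual algorithm. Besides the reachable outputs, it records the select signal of every mux the key passes through, which is how the `chicken` control path surfaces without anyone having known about it.

```python
from collections import deque

# Hypothetical netlist for the simplified example. Each cell lists its data
# inputs, an optional select input, and the net it drives.
CELLS = [
    {"name": "grant_mux", "data": ["zero", "key"], "sel": "grant", "out": "data_o"},
    {"name": "dbg_buf", "data": ["key"], "sel": None, "out": "debug_i.k"},
    {"name": "dbg_mux", "data": ["zero", "debug_i.k"], "sel": "chicken", "out": "debug_o"},
]
OUTPUTS = {"data_o", "debug_o"}


def forward_reach(asset: str):
    """Breadth-first search from the asset along data inputs.

    Returns (reached, controls): every net the asset can structurally reach,
    and (output net, select net) pairs for each mux the asset passes through.
    """
    reached, controls = {asset}, set()
    work = deque([asset])
    while work:
        net = work.popleft()
        for cell in CELLS:
            if net in cell["data"]:
                if cell["sel"] is not None:
                    controls.add((cell["out"], cell["sel"]))
                if cell["out"] not in reached:
                    reached.add(cell["out"])
                    work.append(cell["out"])
    return reached, controls


reached, controls = forward_reach("key")
print(sorted(reached & OUTPUTS))  # ['data_o', 'debug_o']
print(sorted(controls))  # [('data_o', 'grant'), ('debug_o', 'chicken')]
```

Because the search follows structural paths rather than simulated values, debug_o and its chicken select appear in the result even though no test ever sets the chicken bit; each flagged output and control signal can then be targeted for verification.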

In conclusion, information flow tracking of secure assets lets us pinpoint the one or few outputs, out of potentially hundreds, that could represent a security weakness, even though the instance driving those outputs and its functionality, including chicken bits, were undocumented and unknown. By shedding light on these hidden elements, we fortify semiconductor SoC designs against potential threats, advancing the cause of security in the ever-evolving landscape of hardware development.